Data processing

Experts in extract, transform, and load (ETL) processes for large datasets.

OPTIMIZE THE ENTIRE LIFECYCLE OF YOUR DATA

We are experts in extracting, transforming, and loading (ETL) large datasets. We work with big data for your projects, tailored to your needs and specifications.

What is ETL?

ETL is a data integration process that combines data from multiple sources into a single, consistent dataset and loads it into a target destination or system.

Extract

Data is extracted from diverse sources and the relevant records are collected in a centralized location for further processing.

Transform

Data undergoes transformation and cleansing to ensure consistency, quality, and compatibility with the target destination.

Load

The transformed data is loaded into the target destination.
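
The three stages above can be sketched in a few lines of Python. This is a minimal illustration, not a description of our production pipelines: the sample CSV text, the `people` table, and the function names `extract`, `transform`, `load`, and `run_etl` are all hypothetical, and an in-memory SQLite database stands in for the target destination.

```python
import csv
import io
import sqlite3

# Hypothetical sample inputs standing in for two different data sources.
SOURCE_A = "name,city\n Alice ,lima\nBob,CUSCO\n"
SOURCE_B = "name,city\nCarla,Arequipa\n,Lima\n"


def extract(*sources):
    """Extract: read rows from every CSV source into one list of dicts."""
    rows = []
    for text in sources:
        rows.extend(csv.DictReader(io.StringIO(text)))
    return rows


def transform(rows):
    """Transform: trim whitespace, normalize casing, drop rows without a name."""
    cleaned = []
    for row in rows:
        name = (row.get("name") or "").strip()
        city = (row.get("city") or "").strip().title()
        if name:
            cleaned.append((name, city))
    return cleaned


def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS people (name TEXT, city TEXT)")
    conn.executemany("INSERT INTO people VALUES (?, ?)", records)
    conn.commit()


def run_etl(conn):
    """Run all three stages and return what ended up in the target table."""
    load(transform(extract(SOURCE_A, SOURCE_B)), conn)
    return conn.execute("SELECT name, city FROM people ORDER BY name").fetchall()


if __name__ == "__main__":
    # In-memory SQLite is an assumption made to keep the sketch self-contained.
    print(run_etl(sqlite3.connect(":memory:")))
```

Keeping each stage in its own function means a source, a cleaning rule, or the target system can be swapped without touching the other two stages.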

USE CASES

DATA PROCESSING USE CASES

We have built experience in ETL processes, standardizing large datasets to serve as input for different projects, such as:

Data cleaning:

We meticulously cleaned the attributes of 75,000 schools in Peru, preparing them for import into OpenStreetMap (OSM) to enrich the map. Our aim was to improve access to information about educational resources and infrastructure across the country.

Data standardization:

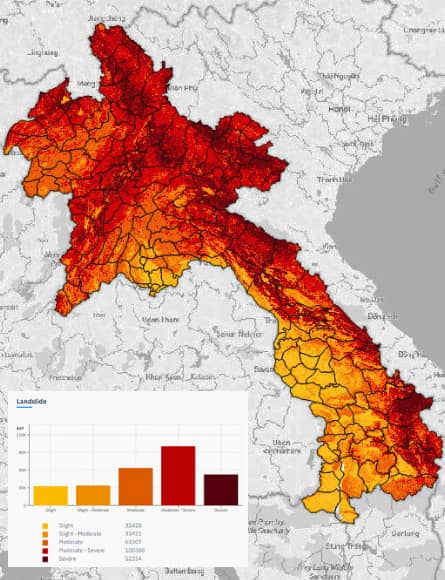

We cleaned, transformed, and standardized numerous geospatial datasets from various sources and formats (raster, vector, tabular, etc.), adding value to the projects that use them.

Data fetch:

We supported OpenAQ by fetching and standardizing data from a variety of sources so that global air quality data could be ingested into the OpenAQ platform.